The puzzle

A model diagnoses a rare autoimmune condition. The reasoning unfolds over several steps — symptoms weighed, differential diagnoses considered and eliminated, a final judgment reached. Each step follows from the last. The output reads like a clinical case conference: structured, convergent, disciplined.

But no rule was applied. No diagnostic algorithm was stored and triggered. No premise was held in working memory while an inference rule operated on it. The model has no beliefs. It has no explicit logic. It has statistical regularities learned from text — and from those regularities, something that looks like reasoning emerged.

The classical picture of inference has held for centuries. An agent holds premises. The agent applies a rule. A conclusion follows. Logic specifies which transitions are valid. Inference is the movement; logic is the law.

Three pressures have been building against this picture. Cognitive science shows that much human reasoning occurs without explicit rule application — pattern recognition, embodied know-how, fast intuitive judgment that resists propositional reconstruction. Philosophy faces an awkward question: if inference is governed by laws of thought, why do classical, intuitionistic, paraconsistent, and relevance logics each have defensible claims to characterize valid reasoning? If the laws are immutable, there should be one set. There are many.

But the sharpest pressure comes from LLMs. No beliefs. No rules stored anywhere. No personal-level agency. Yet structured, task-appropriate reasoning emerges — reasoning that adapts its character to the problem it faces. If inference requires rules, these systems cannot infer. But they do. So either inference does not require rules, or what we call inference is not what we thought it was.

The settling

What if reasoning is not rule-following but settling?

When the model processes a medical case, it does not apply diagnostic rules and derive a conclusion. It settles into a state where the evidence, the domain patterns, and the output cohere. When it processes a legal case, it settles into a different kind of state — one where multiple frameworks are maintained simultaneously. The difference is not in the rules. There are no rules. The difference is in the kind of stability the problem demands.

What determines the settling is not a rule but a stance: a set of commitments about what to preserve, what to eliminate, and what to tolerate. Classical logic demands that contradictions be eliminated: encounter both A and not-A, and everything follows (the principle of explosion). Every proposition becomes derivable. The system destroys its own capacity to distinguish anything. Paraconsistent logic takes a different stance: contradictions can persist locally without infecting everything else. The system contains the damage. Intuitionistic logic demands construction: you cannot assert that a claim is either true or false unless you can show which. Each stance produces different stable outcomes from the same starting material.
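The contrast between the classical and paraconsistent stances can be checked mechanically. The sketch below is illustrative only: it assumes Priest's three-valued Logic of Paradox (LP) as the paraconsistent stance, which the text does not name, and the helper `is_valid` is a hypothetical name. It tests whether an arbitrary B follows from A together with not-A.

```python
from itertools import product

# Truth values: 0.0 = false, 0.5 = "both true and false" (LP only), 1.0 = true.
def neg(a):
    return 1.0 - a

def is_valid(premises, conclusion, values, designated):
    """Valid iff no assignment designates every premise but not the conclusion."""
    for a, b in product(values, repeat=2):
        premises_hold = all(p(a, b) in designated for p in premises)
        if premises_hold and conclusion(a, b) not in designated:
            return False
    return True

# Explosion: from A and not-A, infer an arbitrary B.
premises = [lambda a, b: a, lambda a, b: neg(a)]
conclusion = lambda a, b: b

# Classical stance: two values, only "true" is designated.
classical = is_valid(premises, conclusion, values=(0.0, 1.0), designated={1.0})
# LP stance (assumed here): three values, "true" and "both" are designated.
lp = is_valid(premises, conclusion, values=(0.0, 0.5, 1.0), designated={0.5, 1.0})

print("explosion valid classically:", classical)
print("explosion valid in LP:", lp)
```

Classically the premises can never both hold, so the inference is vacuously valid. In LP the assignment where A is "both" satisfies the premises while B stays false, so the contradiction stays contained.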

These are not competing descriptions of how thought works. They are different settings for the same process. Changing the logic is like changing a machine's operating parameters, not like discovering a different kind of machine.

If inference were governed by immutable laws — if the rules of thought were discovered the way the laws of physics are discovered — there would be one logic, the way there is one gravitational constant. The existence of multiple defensible logics is what you would expect in a domain governed by revisable stances rather than by the structure of reality itself.

The two regimes

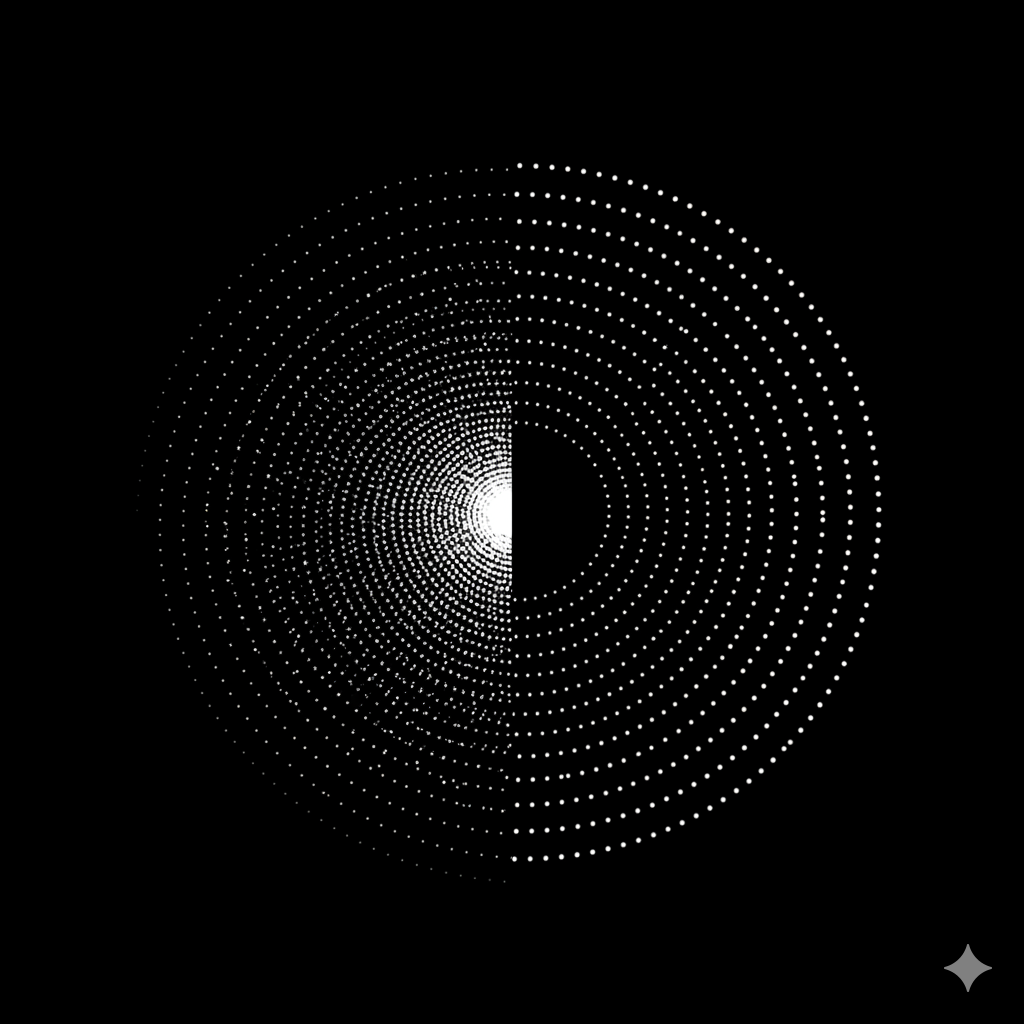

Consider thirty-six reasoning tasks across seven domains: medical diagnosis, legal reasoning, policy evaluation, engineering failure analysis, technical debugging, business valuation, and sports officiating. The model receives evidence one piece at a time. At each stage, it outputs a probability distribution over alternative explanations. Track how its confidence evolves as the evidence accumulates. What emerges is not a continuum. It is two sharply distinct patterns with nothing in between.
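One way to track how such a distribution evolves is to compute its Shannon entropy after each piece of evidence. This is a minimal sketch under assumptions: the text does not say which measure was used, and the distributions below are made up for illustration, not taken from the study.

```python
import math

def entropy_bits(dist):
    """Shannon entropy in bits of a discrete probability distribution."""
    return -sum(p * math.log2(p) for p in dist if p > 0)

def trajectory(stages):
    """Entropy after each evidence stage, for one task."""
    return [entropy_bits(d) for d in stages]

# Made-up distributions over four alternatives, one row per evidence stage.
convergent_task = [
    [0.25, 0.25, 0.25, 0.25],
    [0.55, 0.25, 0.15, 0.05],
    [0.85, 0.10, 0.04, 0.01],
    [0.97, 0.01, 0.01, 0.01],
]
equilibrium_task = [
    [0.25, 0.25, 0.25, 0.25],
    [0.30, 0.30, 0.20, 0.20],
    [0.35, 0.25, 0.25, 0.15],
    [0.30, 0.30, 0.25, 0.15],
]

conv = trajectory(convergent_task)
equi = trajectory(equilibrium_task)
print("convergent: ", [round(h, 2) for h in conv])
print("equilibrium:", [round(h, 2) for h in equi])
```

In this toy data the first trajectory falls steadily toward zero, while the second stays close to its starting value even though the distribution keeps shifting.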

In the first pattern — call it convergence — the model narrows rapidly toward a single answer. Evidence eliminates alternatives until one remains. Medical diagnosis works this way. A patient presents symptoms. Lab results arrive. Imaging confirms. The space of plausible diagnoses shrinks at every stage until one survives. Engineering failure analysis works the same way. Technical debugging likewise. In every case, the problem has a single hidden cause, and the evidence progressively reveals it.

In the second pattern — call it equilibrium — the model maintains multiple alternatives without resolving them. Evidence modulates the distribution but never collapses it. Legal interpretation works this way. The same traffic accident, analyzed under a negligence framework and under a strict liability framework, yields different conclusions — both defensible, neither decisive. Business valuation works the same way. Policy evaluation likewise. The problem does not have a single correct answer. It has multiple defensible frameworks, and the evidence does not choose between them.

The gap between these patterns is enormous. The standardized effect size is approximately 4.0, on a scale where 0.8 is conventionally "large." No cases fall in between. And the pattern tracks problem structure, not domain label: a policy question with clear causal structure converges; a sports question with interpretive ambiguity does not.
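For readers unfamiliar with effect sizes, the sketch below shows how a standardized mean difference is computed. It assumes the statistic is Cohen's d with a pooled standard deviation, which the text does not state, and the two samples are made-up values, not the study's measurements, so the printed number says nothing about the reported 4.0.

```python
import math
from statistics import mean, variance

def cohens_d(group_a, group_b):
    """Standardized mean difference using the pooled sample standard deviation."""
    n_a, n_b = len(group_a), len(group_b)
    pooled_var = (
        (n_a - 1) * variance(group_a) + (n_b - 1) * variance(group_b)
    ) / (n_a + n_b - 2)
    return (mean(group_a) - mean(group_b)) / math.sqrt(pooled_var)

# Made-up final-stage entropies in bits (hypothetical, for illustration only).
equilibrium_final = [1.9, 2.1, 2.0, 1.8, 2.2]
convergence_final = [0.3, 0.5, 0.2, 0.4, 0.6]

d = cohens_d(equilibrium_final, convergence_final)
print(round(d, 1))
```

The statistic expresses the distance between the two group means in units of their shared spread, which is why tight, well-separated clusters produce very large values.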

The same model. The same architecture. The same weights. Two qualitatively different modes of operation — not because it follows different rules in each case, but because it stabilizes differently.

The load

What the equilibrium regime reveals is that contradiction can carry weight.

In the convergence regime, contradiction is noise. Encountering evidence for diagnosis A alongside evidence for diagnosis B is a signal to investigate further — to find the test that eliminates one. The system is narrowing. Contradiction is what remains to be resolved.

In the equilibrium regime, contradiction is the structure. The model reasons under the negligence framework AND under the strict liability framework. Both produce conclusions. The conclusions are incompatible. The model holds them open because the problem requires it.

One case ended with a perfectly uniform distribution across five alternatives. Maximum uncertainty. The problem had five defensible answers and no decisive test to separate them. The appropriate response to irreducible interpretive ambiguity is to keep the alternatives open, not to force a choice.
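If uncertainty is measured as Shannon entropy (an assumption; the text does not name a measure), the uniform case is exactly the maximum attainable over five alternatives:

$$
H(p) = -\sum_{i=1}^{5} p_i \log_2 p_i, \qquad p_i = \tfrac{1}{5} \;\Longrightarrow\; H(p) = \log_2 5 \approx 2.32 \text{ bits}.
$$

Any distribution that favors one alternative over another scores strictly lower.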

This reframes what we see when an LLM holds incompatible positions. From the classical perspective, contradiction is always failure — a sign that something has gone wrong, that a premise needs revision, that the system has broken down. But a system that forced convergence on the legal case — that collapsed the negligence and strict liability frameworks into a single answer — would not be reasoning better. It would be reasoning worse. It would be imposing a structure the problem does not have.

Premature convergence is the error, not the ambiguity. The tension between incompatible frameworks is load-bearing. The equilibrium regime uses that tension to navigate a space where forcing a single answer would destroy information.

The snap

If reasoning is settling, why does deduction feel different?

"All men are mortal; Socrates is a man; therefore Socrates is mortal." This feels compelled. Necessary. The conclusion snaps into place with a force that other reasoning does not have. If inference is just settling into stable states, where does that feeling come from?

From resilience. The pattern underlying this step, modus ponens, settles under virtually every admissible stance. Classical logic validates it. Intuitionistic logic validates it. Relevance logic validates it. Change the settings, and this particular inference comes back almost every time. The snap of logical necessity is the experience of an inference that survives nearly every perturbation: a settling so robust that it feels like it could not be otherwise.
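The robustness claim can be probed on small truth-table logics. This is a sketch under assumptions: the four stances below (classical, strong Kleene K3, Lukasiewicz three-valued, and LP with its material conditional) are stand-ins chosen because they can be checked by enumeration; the intuitionistic and relevance logics named in the text are not modeled here. The function name `modus_ponens_holds` is hypothetical.

```python
from itertools import product

# Truth values: 0.0 = false, 0.5 = middle value, 1.0 = true.
def material(a, b):
    """Conditional defined as (not A) or B; used for classical, K3, and LP."""
    return max(1.0 - a, b)

def lukasiewicz(a, b):
    """Lukasiewicz three-valued conditional."""
    return min(1.0, 1.0 - a + b)

# Each stance: (truth values, designated values, conditional).
STANCES = {
    "classical": ((0.0, 1.0), {1.0}, material),
    "K3": ((0.0, 0.5, 1.0), {1.0}, material),
    "L3": ((0.0, 0.5, 1.0), {1.0}, lukasiewicz),
    "LP": ((0.0, 0.5, 1.0), {0.5, 1.0}, material),
}

def modus_ponens_holds(values, designated, cond):
    """True iff B is designated whenever A and A->B are both designated."""
    return all(
        b in designated
        for a, b in product(values, repeat=2)
        if a in designated and cond(a, b) in designated
    )

results = {name: modus_ponens_holds(*spec) for name, spec in STANCES.items()}
print(results)
```

Modus ponens survives three of these four settings. It fails for LP's material conditional, which is one reason the hedge "virtually every" is apt.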

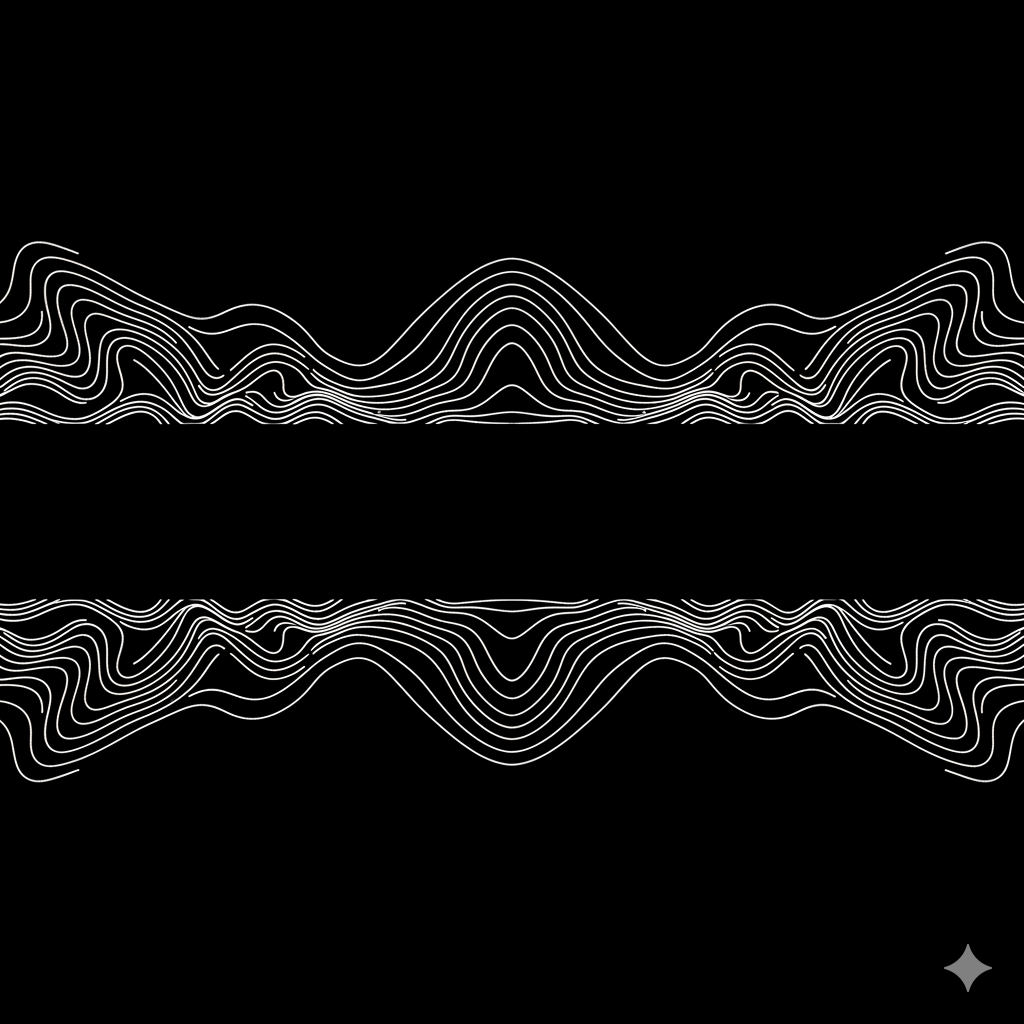

Deduction is a limit case of the same operation. Where the settling is maximally robust, it feels like law. Where it is fragile, it feels like judgment. Where it is contested, it feels like interpretation.

The gradient runs from deduction (everything frozen except the consequence, maximum constraint), through abduction (revision in play, the system proposing new explanations), through paradigm shift (the fundamental commitments themselves open to revision), to creative work, where the most parameters are unfrozen. These are not different kinds of thought. They are the same process with different amounts of freedom. What varies is not the mechanism. What varies is how much is locked down when the system runs.

The question

One thing remains unexplained: the gap.

If reasoning varies along a gradient, you would expect behavior to spread across a continuum — some tasks more convergent, some more equilibrial, most somewhere in between. Instead, behavior clusters into two modes with empty space between them. The bimodal separation is sharp. Why?

The speculation — and it is a speculation — is that the equilibrium regime requires a structural capacity the convergence regime does not. To hold "under framework A, X follows" and "under framework B, not-X follows" simultaneously, without the contradiction destroying the system, you need a way to bind inferences to contexts. You need something that says: this conclusion holds here, not everywhere. This is structurally similar to what logicians call modal operators — formal devices for quarantining what holds in one context from what holds in another.
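A toy data structure makes the idea of binding concrete. This is a minimal sketch, not a model of what happens inside an LLM: the framework labels, the proposition name `defendant_liable`, and which framework yields which verdict are all illustrative assumptions.

```python
from collections import defaultdict

def is_consistent(claims):
    """Consistent iff no proposition is assigned both True and False."""
    seen = {}
    for prop, value in claims:
        if seen.setdefault(prop, value) != value:
            return False
    return True

# Conclusions tagged with the framework that produced them (illustrative).
tagged = [
    ("negligence", "defendant_liable", False),
    ("strict_liability", "defendant_liable", True),
]

# Flat store: drop the context tag, and the two conclusions collide.
flat = [(prop, value) for _, prop, value in tagged]

# Indexed store: keep each conclusion bound to its framework.
by_context = defaultdict(list)
for framework, prop, value in tagged:
    by_context[framework].append((prop, value))

flat_ok = is_consistent(flat)
indexed_ok = all(is_consistent(claims) for claims in by_context.values())
print("flat store consistent:", flat_ok)
print("indexed store consistent:", indexed_ok)
```

The same two conclusions are contradictory when pooled and unproblematic when each stays attached to its framework. The index plays the role the text assigns to a modal operator.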

You either need that structure or you do not. If the problem has a single hidden cause, you do not — you just converge. If the problem requires maintaining incompatible frameworks, you do — and the system must build that binding on the fly. The gap may be categorical because the capacity is categorical. There is no halfway point between needing to quarantine contradictions and not needing to.

This connects the empirical finding to deep questions in modal logic, the study of how possibility, necessity, and context interact. The data meets the theory at a point neither anticipated alone.

Reasoning is not about having the right rules. It is about settling into the right kind of stability for the problem you face. LLMs show us this because they have no rules to fall back on. What they have — what any reasoning system has — is the capacity to settle. And the way a system settles tells you more about the problem than about the system.

Two regimes of sequential reasoning in LLMs were characterized at UNILOG 2025 (Cusco, Peru). A fuller account of inference as stabilization will appear in a forthcoming monograph.